Abstract

The Crew AI approach relies on multiple collaborating AI agents to accomplish complex tasks. An alternative is emerging in which a single AI agent embodies this collaborative architecture internally, through layers of subroutines, components, and refinements. This paper explores how a single agent can integrate Crew AI methodologies, using modularity and self-supervisory mechanisms to simulate a network of agents. By examining the interplay of complexity, transparency, and refinement, it outlines where this approach mirrors and where it diverges from traditional Crew AI, and argues that it offers a scalable way to manage and improve AI workflows.

1. Introduction

The concept of Crew AI, where multiple AI agents collaborate to achieve goals, has gained traction for its potential to solve complex problems that exceed the capacity of a single model. Yet, the practicalities of deploying, managing, and synchronizing multiple agents can introduce inefficiencies and scaling issues. Recent advancements suggest that a single AI agent can simulate the collaborative dynamics of Crew AI by incorporating layers of internal complexity and self-supervision. This paper examines how such an approach aligns with and departs from traditional Crew AI, emphasizing its ability to manage nuanced workflows through modular subroutines and supervisory structures.

2. The Crew AI Paradigm

2.1 Defining Crew AI

Crew AI refers to systems where multiple AI agents are assigned distinct but interdependent tasks, working collaboratively to achieve a unified goal. Each agent contributes specialized capabilities, fostering a system that is both versatile and resilient.

2.2 Benefits and Limitations

Benefits:

- Specialization: Each agent can be tailored for specific functions.

- Redundancy: Collaborative efforts reduce the likelihood of systemic failure.

Limitations:

- Coordination Challenges: Synchronizing multiple agents introduces latency and complexity.

- Scaling Issues: Increasing the number of agents often results in diminishing returns.
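One way to see why coordination cost can grow faster than the agent count is to count communication links. The sketch below assumes a fully connected crew in which every agent may exchange messages with every other agent; the function name `pairwise_channels` is illustrative, and systems that route everything through a single orchestrator would have only a linear number of links.

```python
def pairwise_channels(n_agents: int) -> int:
    """Count distinct agent-to-agent links in a fully connected crew."""
    if n_agents < 0:
        raise ValueError("n_agents must be non-negative")
    # Each unordered pair of agents shares one link: n * (n - 1) / 2.
    return n_agents * (n_agents - 1) // 2


for n in (2, 4, 8, 16):
    print(f"{n} agents -> {pairwise_channels(n)} links")
```

Doubling the crew roughly quadruples the number of links under this assumption, which is one plausible source of the diminishing returns noted above.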

3. Evolution Toward a Unified Agent

3.1 Modular Architecture

A single AI agent can mimic Crew AI by adopting a modular architecture. This involves integrating distinct subroutines, each responsible for specific tasks. For example, a language model might include dedicated components for:

- Content Generation: Producing text outputs based on prompts.

- Validation: Cross-referencing outputs with reliable data sources.

- Ethical Evaluation: Assessing the alignment of outputs with predefined ethical guidelines.

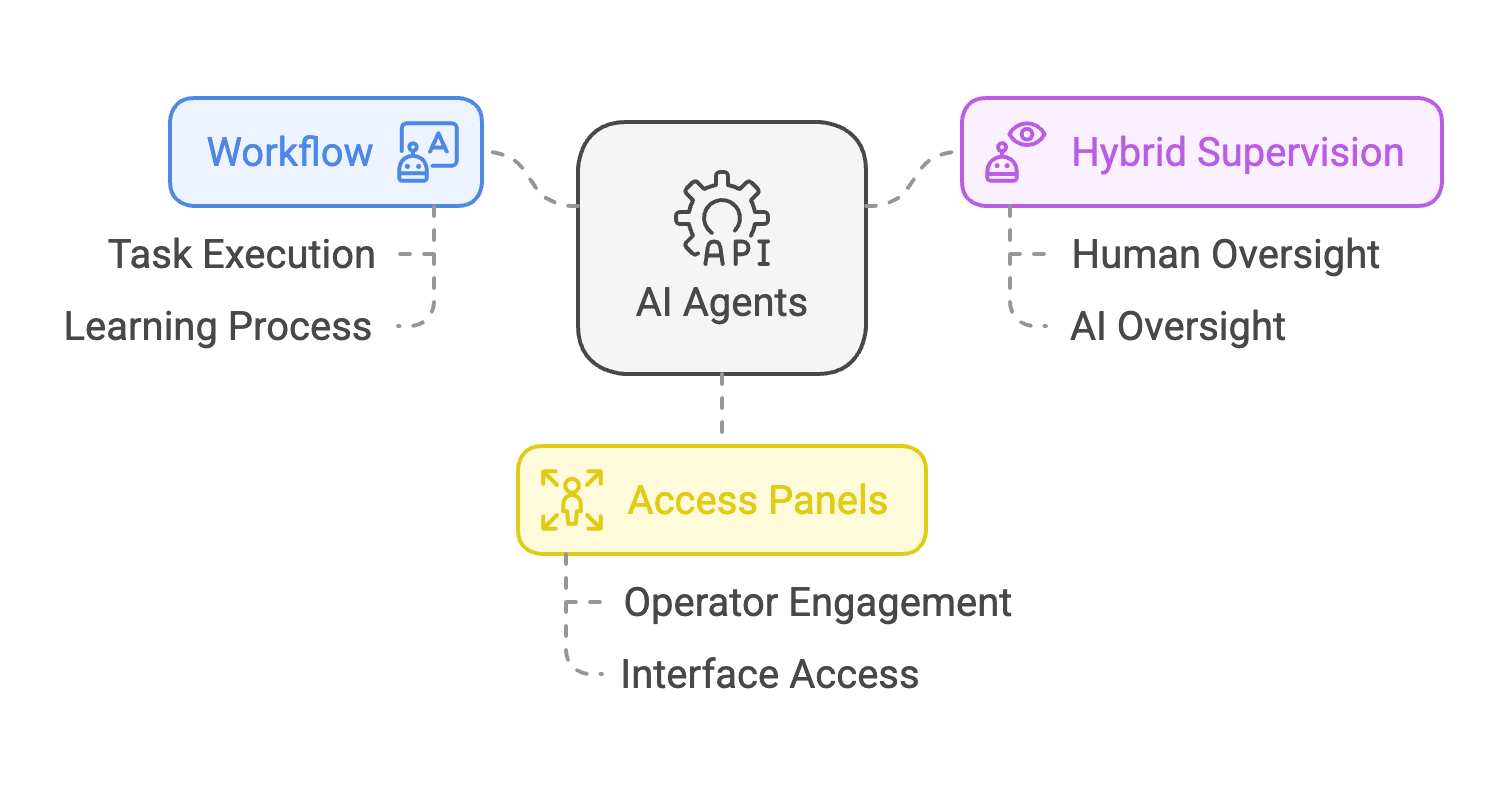
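The three components listed in this section can be sketched as a small pipeline of functions that pass a shared record along. This is a minimal illustration, not the paper's implementation: the names `Draft`, `generate`, `validate`, `ethical_review`, `run_pipeline`, and `BLOCKED_TERMS` are all hypothetical, and each subroutine is a placeholder for a real model call, data lookup, or policy check.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Draft:
    """Shared state passed from one subroutine to the next."""
    prompt: str
    text: str = ""
    approved: bool = True
    notes: List[str] = field(default_factory=list)


Subroutine = Callable[[Draft], Draft]


def generate(draft: Draft) -> Draft:
    # Placeholder for a real model call.
    draft.text = f"Answer to '{draft.prompt}'"
    draft.notes.append("generate: produced text")
    return draft


def validate(draft: Draft) -> Draft:
    # Placeholder for cross-referencing against a trusted data source.
    if not draft.text.strip():
        draft.approved = False
        draft.notes.append("validate: empty output")
    else:
        draft.notes.append("validate: passed")
    return draft


BLOCKED_TERMS = {"password", "ssn"}  # hypothetical policy list


def ethical_review(draft: Draft) -> Draft:
    # Placeholder for a check against predefined ethical guidelines.
    hits = [term for term in BLOCKED_TERMS if term in draft.text.lower()]
    if hits:
        draft.approved = False
        draft.notes.append(f"ethics: blocked terms {sorted(hits)}")
    else:
        draft.notes.append("ethics: passed")
    return draft


def run_pipeline(prompt: str, steps: List[Subroutine]) -> Draft:
    """Run each subroutine in order on the same draft."""
    draft = Draft(prompt=prompt)
    for step in steps:
        draft = step(draft)
    return draft


result = run_pipeline("Summarize the report", [generate, validate, ethical_review])
print(result.approved, result.notes)
```

Because every subroutine has the same signature, a component can be replaced or reordered without touching the others, which is the property the modular design is meant to provide.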

3.2 Supervisory Layers

To replicate the oversight traditionally provided by separate agents in a Crew AI system, a unified agent incorporates supervisory layers that:

- Monitor the performance of internal subroutines.

- Enforce consistency across outputs.

- Adjust parameters dynamically based on feedback.

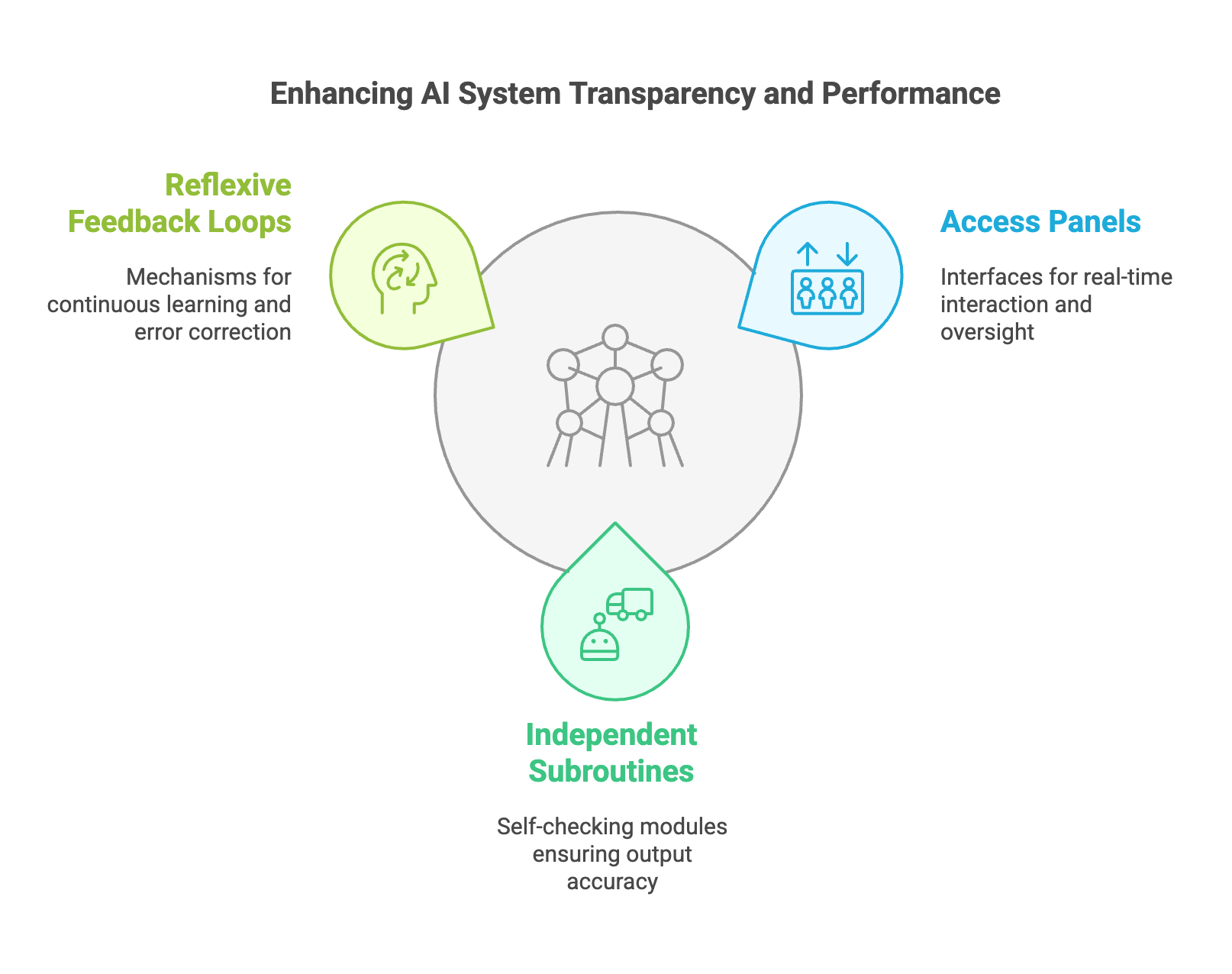
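The three supervisory duties described in this section can be illustrated with a small class. The sketch below is an assumption-laden toy: `Supervisor`, its `target` and `step` settings, and the choice of a single `temperature` value as the adjustable parameter are all hypothetical, and quality scores are assumed to arrive from some external evaluation as numbers between 0 and 1.

```python
from statistics import mean
from typing import Dict, List


class Supervisor:
    """Tracks per-subroutine scores and nudges one tunable parameter."""

    def __init__(self, target: float = 0.8, step: float = 0.1) -> None:
        self.target = target  # assumed minimum acceptable average score
        self.step = step      # assumed size of each adjustment
        self.scores: Dict[str, List[float]] = {}
        self.temperature: Dict[str, float] = {}

    def register(self, name: str, temperature: float) -> None:
        self.scores[name] = []
        self.temperature[name] = temperature

    def record(self, name: str, score: float) -> None:
        # Monitoring: keep a history of quality scores per subroutine.
        self.scores[name].append(score)

    def is_consistent(self, verdicts: Dict[str, str]) -> bool:
        # Consistency: all subroutines must report the same verdict.
        return len(set(verdicts.values())) <= 1

    def adjust(self, name: str) -> float:
        # Adjustment: lower the temperature when the average score is
        # below target, never going below zero.
        history = self.scores[name]
        if history and mean(history) < self.target:
            lowered = self.temperature[name] - self.step
            self.temperature[name] = round(max(0.0, lowered), 3)
        return self.temperature[name]


supervisor = Supervisor()
supervisor.register("generator", temperature=0.7)
for score in (0.9, 0.6, 0.5):
    supervisor.record("generator", score)
print(supervisor.adjust("generator"))
print(supervisor.is_consistent({"validator": "pass", "ethics": "fail"}))
```

A production supervisor would likely track several parameters and use a more careful update rule; the point here is only that monitoring, consistency checks, and adjustment can live in one internal component.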

4. Access Panels: Transparency in a Unified System

4.1 Functionality

Access Panels, as introduced in the workflow hybrid supervision model, play a crucial role in enabling transparency within a single-agent framework. These interactive interfaces allow stakeholders to:

- Inspect the operations of individual subroutines.

- Modify parameters and prompts in real time.

- Review decision-making pathways for clarity and accountability.
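To make the three capabilities above concrete, the following sketch models an Access Panel as an in-memory object rather than a graphical interface. Everything here is hypothetical: the class names `AccessPanel` and `SubroutineConfig`, the method names, and the `threshold` parameter are illustrative and are not taken from the workflow hybrid supervision model.

```python
from copy import deepcopy
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class SubroutineConfig:
    prompt: str
    params: Dict[str, Any] = field(default_factory=dict)


class AccessPanel:
    """Hypothetical in-memory panel: inspect, modify, and review."""

    def __init__(self) -> None:
        self._configs: Dict[str, SubroutineConfig] = {}
        self._trace: List[str] = []

    def register(self, name: str, config: SubroutineConfig) -> None:
        self._configs[name] = config

    def inspect(self, name: str) -> SubroutineConfig:
        # Return a copy so that inspection cannot change live settings.
        return deepcopy(self._configs[name])

    def update(self, name: str, **params: Any) -> None:
        # Apply the change and log it so it can be reviewed later.
        self._configs[name].params.update(params)
        self._trace.append(f"{name}: set {sorted(params)}")

    def record_decision(self, name: str, reason: str) -> None:
        self._trace.append(f"{name}: {reason}")

    def decision_trace(self) -> List[str]:
        return list(self._trace)


panel = AccessPanel()
panel.register("validator", SubroutineConfig("Check facts", {"threshold": 0.5}))
panel.update("validator", threshold=0.7)
panel.record_decision("validator", "rejected draft: unsupported claim")
print(panel.inspect("validator").params)
print(panel.decision_trace())
```

Logging every parameter change alongside the decisions it influenced is one simple way to support the accountability goal: a reviewer can see both what the agent decided and which human adjustments preceded it.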

4.2 Enhancing Collaboration

By providing a centralized platform for interaction, Access Panels facilitate human-AI collaboration that mirrors the dynamics of Crew AI. Users can intervene, refine, and optimize workflows without needing to coordinate between multiple agents.

5. Self-Supervision and Reflexivity

5.1 Independent Subroutines

In a single-agent system, self-supervision is achieved by integrating independent subroutines that assess and validate the primary outputs. These subroutines function as internal checks and balances, akin to separate agents in a Crew AI framework.

5.2 Reflexive Feedback Loops

Reflexive feedback loops enable the agent to:

- Continuously evaluate its performance.

- Identify and rectify errors without external intervention.

- Learn iteratively by incorporating feedback into its decision-making process.
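A reflexive feedback loop of this kind can be sketched as a produce, critique, and retry cycle. The code below is a minimal illustration under stated assumptions: `reflexive_loop`, `toy_produce`, and `toy_critique` are hypothetical names, the critic stands in for the independent subroutines of Section 5.1, and "learning" is reduced to carrying accumulated feedback into the next attempt rather than updating model weights.

```python
from typing import Callable, List, Optional, Tuple


def reflexive_loop(
    produce: Callable[[List[str]], str],
    critique: Callable[[str], Optional[str]],
    max_rounds: int = 3,
) -> Tuple[str, List[str]]:
    """Regenerate output until the critic finds no issue or rounds run out."""
    feedback: List[str] = []
    output = produce(feedback)
    for _ in range(max_rounds):
        issue = critique(output)
        if issue is None:
            break  # the internal check passed; no external help was needed
        feedback.append(issue)      # remember what went wrong
        output = produce(feedback)  # retry with the feedback applied
    return output, feedback


def toy_produce(feedback: List[str]) -> str:
    # Placeholder generator that repairs the one issue it is told about.
    text = "draft"
    if "missing citation" in feedback:
        text += " with citation"
    return text


def toy_critique(output: str) -> Optional[str]:
    # Placeholder critic: returns a description of the problem, or None.
    return None if "citation" in output else "missing citation"


final_text, issues = reflexive_loop(toy_produce, toy_critique)
print(final_text, issues)
```

The `max_rounds` cap is a deliberate design choice in this sketch: without it, a critic that can never be satisfied would keep the agent looping indefinitely.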

6. Comparative Analysis

| Feature | Crew AI | Unified Single Agent |
|---|---|---|
| Structure | Multiple interacting agents | Modular subroutines |
| Coordination | Requires synchronization | Internally managed |
| Scalability | Limited by agent interactions | High, due to unified control |
| Transparency | Distributed across agents | Centralized via Access Panels |
| Error Mitigation | Redundancy through agents | Reflexive feedback loops |

7. Implementation Framework

7.1 Designing Internal Modularity

- Define Subroutines: Establish specialized components within the agent for tasks such as data validation, ethical evaluation, and content refinement.

- Integrate Supervisory Layers: Implement oversight mechanisms to monitor and manage subroutine performance.

- Develop Access Panels: Provide user interfaces for real-time interaction and transparency.

7.2 Training and Optimization

- Layered Training: Train subroutines independently before integrating them into the unified system.

- Feedback Integration: Use reflexive feedback loops to fine-tune performance.

- Stakeholder Involvement: Incorporate user input to align outputs with real-world needs.

8. Case Studies

8.1 Healthcare: Unified Diagnostic Systems

A healthcare provider deployed a single-agent AI system designed to emulate Crew AI functionality. Modular subroutines handled tasks such as patient data analysis, diagnosis validation, and treatment recommendations. Supervisory layers ensured consistency, while Access Panels allowed clinicians to intervene, resulting in improved diagnostic accuracy and reduced errors.

8.2 Financial Services: Fraud Detection

In a financial institution, a unified agent replaced a traditional Crew AI setup for fraud detection. Independent subroutines assessed transaction patterns, validated findings, and flagged anomalies. Reflexive feedback loops and Access Panels enabled analysts to refine the system, leading to a 30% reduction in false positives.

9. Ethical and Practical Considerations

9.1 Responsibility and Accountability

Centralizing complexity within a single agent raises questions about accountability. While Access Panels enhance transparency, organizations must ensure robust governance to prevent misuse.

9.2 Balancing Efficiency and Complexity

As unified agents grow more complex, maintaining efficiency becomes challenging. Regular audits and iterative optimizations are essential to balance sophistication with performance.

10. Conclusion

The evolution of AI from Crew-based systems to unified agents represents a natural progression in managing complexity. By integrating modular subroutines, reflexive feedback loops, and Access Panels, a single agent can replicate the collaborative dynamics of Crew AI while addressing its limitations. This approach offers a scalable, transparent, and efficient framework for advancing AI capabilities in diverse domains.
